The Seven-Part Curriculum ChatGPT May Have Learned

Out of simple curiosity, I wondered what GPT might have studied. Looking up the data it was trained on seemed a little boring, so instead I looked into the kinds of problems that natural language processing (NLP) research tries to solve.

The website Papers with Code classifies 583 natural language processing research topics. Each topic represents a question that researchers around the world are studying together. It seems plausible that problems like these made up a small part of the curriculum GPT learned from its vast training data.

From these, I chose seven topics that are closely related to daily life.

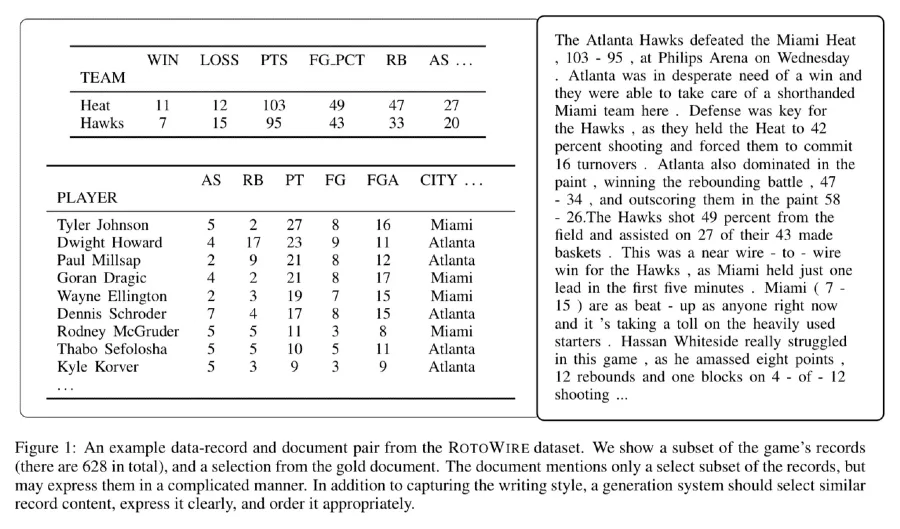

Data-to-Text Generation

This research topic involves generating descriptive text from commonly encountered tabular data. It matters for the productivity of data analysts like me, and at the same time it is research that could affect jobs (GPT isn’t the only thing affecting jobs, right?).

Describing and interpreting data is tricky for machines: given too much freedom, they may imagine facts or inject intentions that the data does not support. Above all, the description must be accurate. It is also not simple to produce, because it must solve several sub-problems at once: what to explain, what to emphasize, and which expressions make the result clearest.

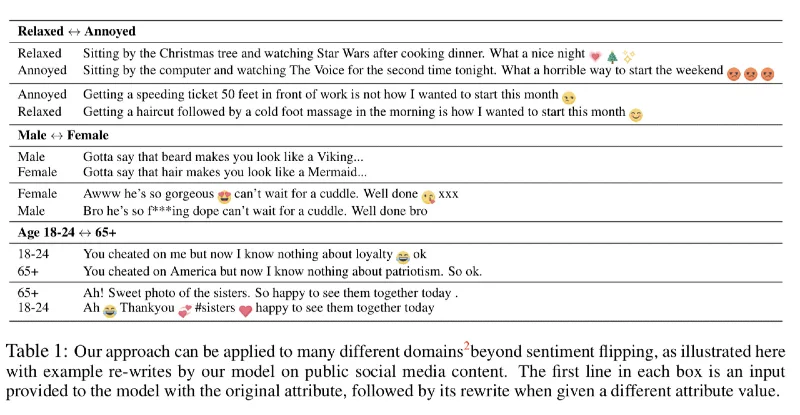

Text Style Transfer

If speech research has “voice mimicry,” natural language processing has “style transfer”: the technique of rewriting a sentence in a different speaker’s style while preserving its meaning.

You can turn a calm tone into an angry one, a masculine style into a feminine one, or childlike words and sentences into adult grammar.

For instance, you could render Elon Musk’s technical explanation of SpaceX in the style of Harry Potter’s speech.

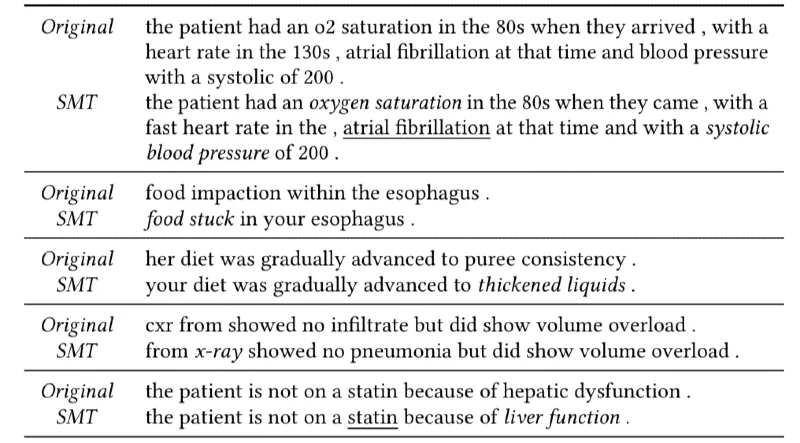

Clinical Language Translation

When our symptoms go beyond a mild cold, a doctor’s diagnosis can sound like a foreign language even when it is delivered in our native tongue. To bridge this gap, one research field translates medical texts full of specialized terminology into language the general public can understand.

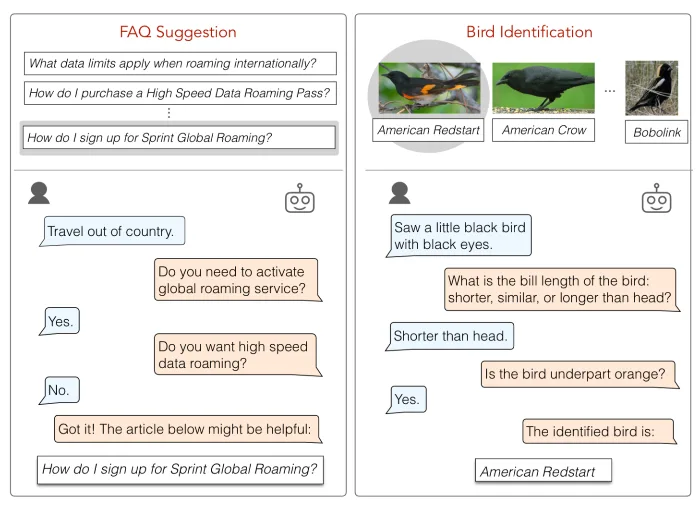

Intent Classification

Simply put, it studies how to understand what someone wants even when they don’t say it outright. For example, in a commerce service, it can help identify whether someone wants to make a purchase, upgrade to a more expensive subscription, or cancel their subscription. In a chatbot, it can identify the relevant topic from the few words a user types and suggest information the user might find useful.
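As a rough illustration of the commerce example, here is a minimal keyword-overlap baseline. Real intent classifiers are usually trained models rather than hand-written rules; the intent names, keyword lists, and the `classify_intent` helper below are hypothetical and chosen only to show the input (a short utterance) and output (one intent label) of the task.

```python
import re

# Hypothetical intents and trigger keywords for a subscription-based commerce service.
INTENT_KEYWORDS = {
    "purchase": {"buy", "order", "checkout", "price"},
    "upgrade": {"upgrade", "premium", "pro"},
    "cancel": {"cancel", "unsubscribe", "refund", "stop"},
}

def classify_intent(utterance: str) -> str:
    """Return the intent whose keywords overlap most with the utterance.

    Ties go to the intent listed first; if nothing matches, return 'unknown'.
    """
    tokens = set(re.findall(r"[a-z']+", utterance.lower()))
    scores = {intent: len(tokens & words) for intent, words in INTENT_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    # No keyword matched at all: don't guess.
    return best if scores[best] > 0 else "unknown"

print(classify_intent("How do I stop my plan and get a refund?"))  # cancel
print(classify_intent("I'd like to buy the blue one"))             # purchase
print(classify_intent("Hello there"))                               # unknown
```

A keyword list only catches what people say explicitly; the research interest lies in recognizing intent when the user never uses the obvious words.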

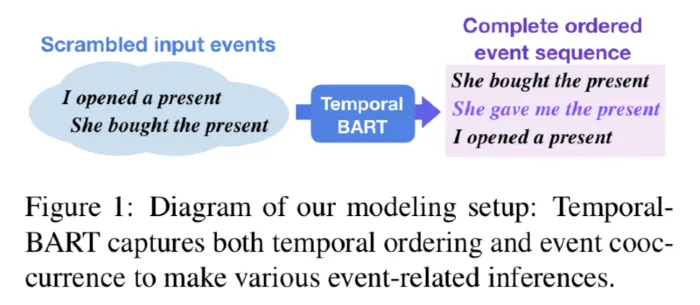

Story Completion

This research aims to fill in the missing parts of a story whose plot is incomplete. That can mean inferring and seamlessly connecting gaps in the middle of the text, not just writing the ending. If the story contains many technical terms or requires a lot of background knowledge, annotations can also be provided to help readers follow along.

This research is useful well beyond novels. Many people fall victim to the “curse of knowledge,” assuming that others already know what they know. It would be helpful if a story completion model could step in as a mediator of knowledge between people.

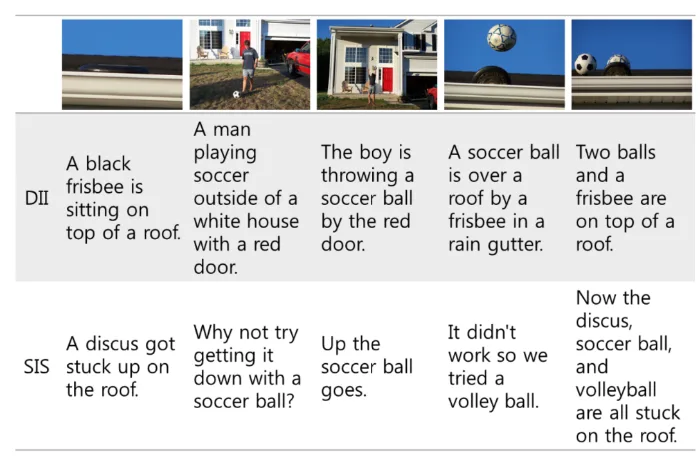

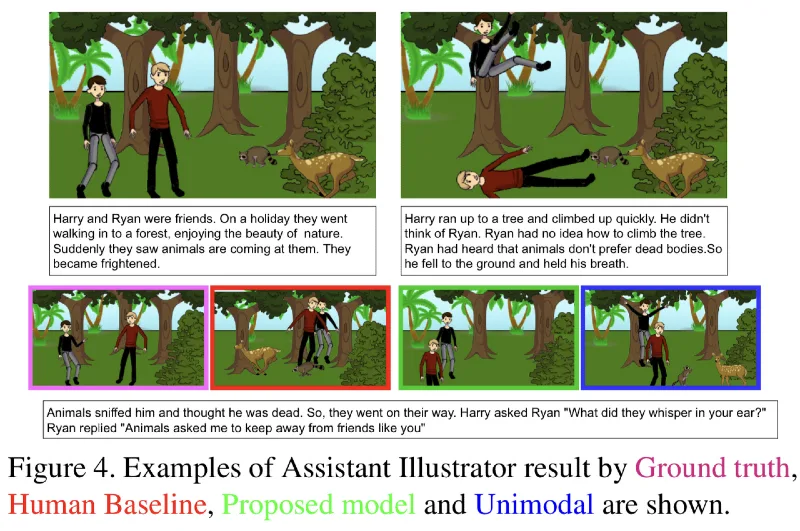

Visual Storytelling

Given a picture of a person running out of Manhattan Station, two very different descriptions could be written:

- “There is a person running at the 2nd exit of Manhattan Station. There is a tree, a road, and a car next to it.”

- “Late again today.”

People can grasp the context and create a story from a single picture like this, but it has long been a difficult task for artificial intelligence. Visual Storytelling research goes a level further: it links multiple images into one coherent context and then generates the sentences that tell the story.

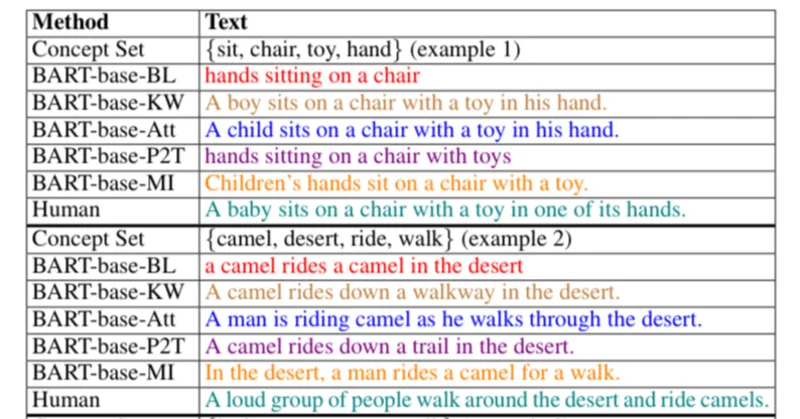

Concept-to-Text Generation

Generating text from concepts is slightly different from the story completion mentioned earlier. Story completion fills in missing context, whereas concept-to-text generation builds a plausible sentence from a few given words.

This time, I gave GPT-4 a task as well: write a sentence using four words, “morning, coffee, bread, subway.” It seems to be an easy task for GPT now.

He woke up early in the morning, poured himself a cup of coffee, took a bite of warm bread, and headed to the subway station for work.
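One small part of judging such an answer can be automated: checking whether every required word actually appears. The sketch below assumes a hypothetical `concept_coverage` helper that uses exact, lowercased word matching, so inflected forms such as "breads" would count as missing; it says nothing about whether the sentence is plausible, which still needs a human or a more sophisticated model to judge.

```python
import re

def concept_coverage(sentence: str, concepts: list[str]) -> tuple[float, list[str]]:
    """Return the fraction of required concepts found in the sentence, plus the missing ones.

    Matching is exact on lowercased words, so inflections (e.g. "breads") count as missing.
    """
    words = set(re.findall(r"[a-z]+", sentence.lower()))
    missing = [c for c in concepts if c.lower() not in words]
    return 1 - len(missing) / len(concepts), missing

answer = ("He woke up early in the morning, poured himself a cup of coffee, "
          "took a bite of warm bread, and headed to the subway station for work.")
print(concept_coverage(answer, ["morning", "coffee", "bread", "subway"]))  # (1.0, [])
print(concept_coverage("I drank coffee.", ["morning", "coffee"]))         # (0.5, ['morning'])
```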

The topics in this article are only a few of the many being researched. Beyond natural language processing, artificial intelligence research spans speech recognition, image and video generation, and much more. You can also brainstorm by browsing the topics researched so far and thinking, “GPT has learned these types of problems, so I can build on them with questions like these.” Please visit Papers with Code to explore more ideas.